The Last Signature

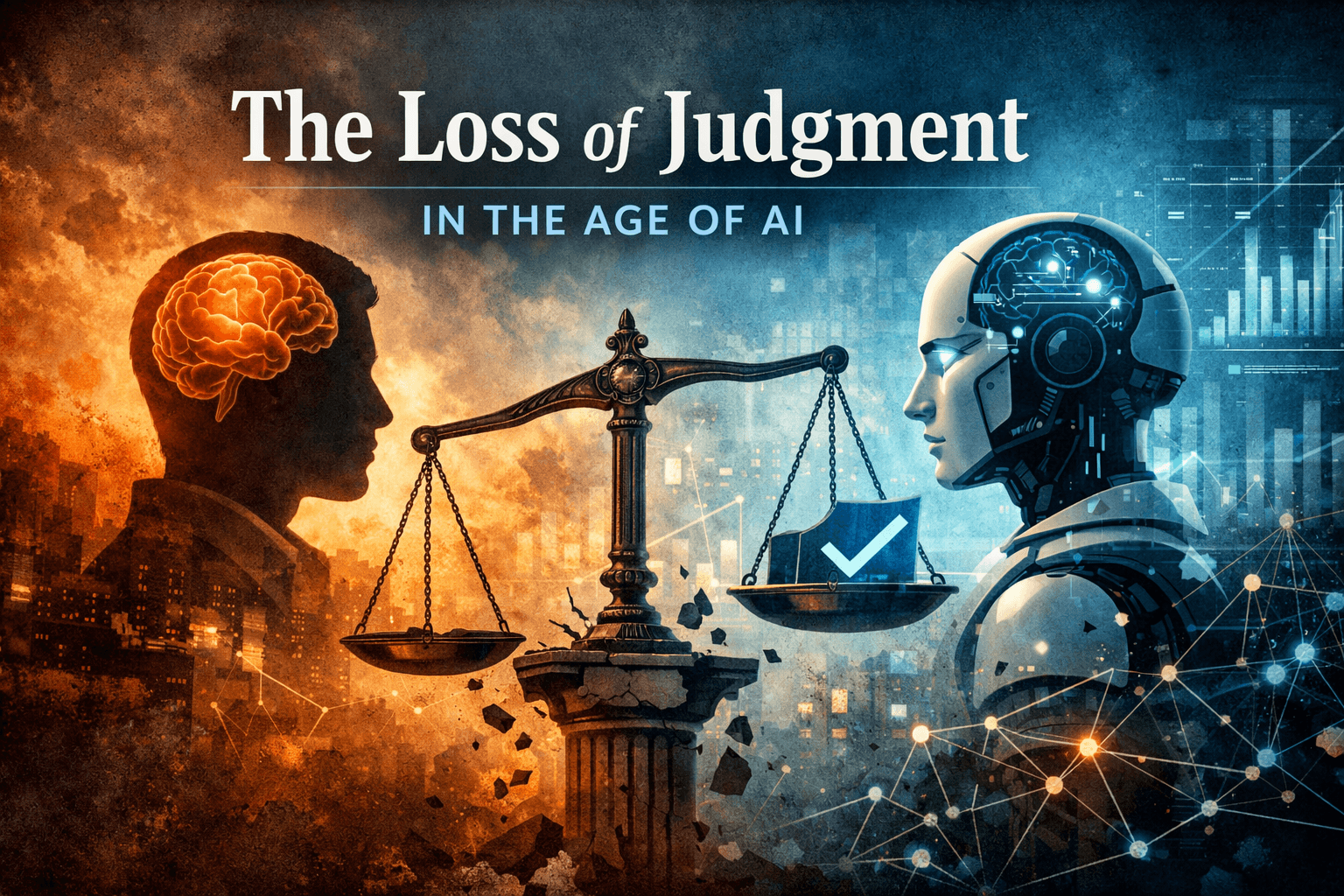

AI accelerates the drafting of reports and papers, but human review and approval cannot scale at the same pace. The real bottleneck is judgment, and most organizations have not designed for it.

Generative AI has made production faster, but the human judgment required at the end of every workflow, the signature that accepts accountability, has not scaled. The result is a structural bottleneck that better models and stricter guidelines cannot solve.